Spring/Java Application Monitoring Basics - Part 1

Let's see why Spring uses the Micrometer library for monitoring, using an HTTP request counter as the example.

This content is for absolute beginners in observability and for developers who are curious about what happens behind the scenes, and why.

Counting HTTP requests

Any metric monitored in an application must have a numeric value. It can be a counter, a gauge, a timer, and so on. Let's count the number of requests that hit the welcome API in the code below.

```java
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class HobbiesAPI {

    // Handles requests to /greeting (any HTTP method, since none is specified)
    @RequestMapping("/greeting")
    public String welcome() {
        return "Welcome to Micrometer Tutorial";
    }
}
```
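Before adding a counter to this controller, here is a rough, plain-Java sketch of the three metric shapes mentioned above. The classes are hypothetical illustrations written for this tutorial, not Micrometer's API: a counter only goes up, a gauge goes up and down, and a timer counts events and sums their durations.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Supplier;

// Hypothetical, minimal metric shapes for illustration only (not Micrometer's API).
class MetricShapes {

    // Counter: a number that only ever goes up (e.g. total requests served).
    static final class SimpleCounter {
        private final AtomicLong total = new AtomicLong();

        void increment() { total.incrementAndGet(); }
        long count() { return total.get(); }
    }

    // Gauge: a number that can go up and down (e.g. requests currently in flight).
    static final class SimpleGauge {
        private final AtomicInteger current = new AtomicInteger();

        void up() { current.incrementAndGet(); }
        void down() { current.decrementAndGet(); }
        int value() { return current.get(); }
    }

    // Timer: counts events and sums their durations, so an average can be derived.
    static final class SimpleTimer {
        private final AtomicLong events = new AtomicLong();
        private final AtomicLong totalNanos = new AtomicLong();

        // Runs the work, then records one event and its elapsed time (even if it throws).
        <T> T record(Supplier<T> work) {
            long start = System.nanoTime();
            try {
                return work.get();
            } finally {
                events.incrementAndGet();
                totalNanos.addAndGet(System.nanoTime() - start);
            }
        }

        long count() { return events.get(); }
        long totalNanos() { return totalNanos.get(); }
    }
}
```

In every case the value that ends up being monitored is just a number, which is why a backend can store and plot it.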

We can use a global counter and increment it on each hit. This is the processing part inside our code: the value stays inside the application until we export or expose it, so we add a separate API to expose it. An `AtomicInteger` is used because a Spring controller is a single shared instance by default and requests arrive on multiple threads.

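```java
import java.util.concurrent.atomic.AtomicInteger;

// Field inside HobbiesAPI: one counter shared by all requests
private final AtomicInteger counter = new AtomicInteger();
```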

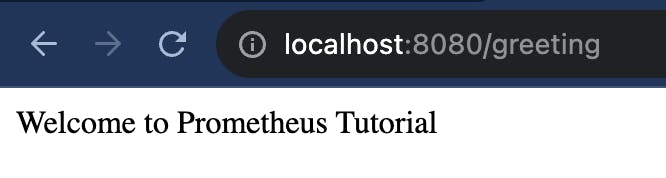

```java
@RequestMapping("/greeting")
public String welcome() {
    counter.getAndIncrement(); // count every hit on /greeting
    return "Welcome to Micrometer Tutorial";
}
```

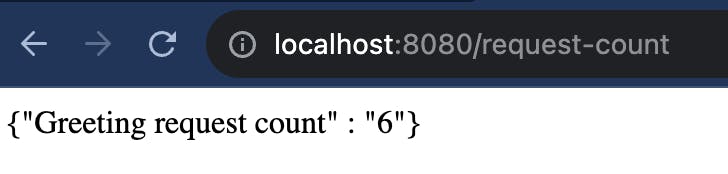

```java
// Separate endpoint that exposes the current value
@RequestMapping("/request-count")
public String getHits() {
    return "Greeting request count: " + counter.get();
}
```

Nice. It works for the single-API use case.
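Why `AtomicInteger` rather than a plain `int`? A minimal sketch, with a hypothetical class name and arbitrary thread and request numbers, simulates concurrent requests with a thread pool and increments both kinds of counter on every "request".

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo: many threads incrementing shared counters, like concurrent HTTP requests.
class ConcurrentCounterDemo {

    private final AtomicInteger atomicHits = new AtomicInteger();
    private int plainHits = 0; // NOT thread-safe: ++ is a read-modify-write sequence

    void handleRequest() {
        atomicHits.getAndIncrement(); // atomic: no update is ever lost
        plainHits++;                  // may lose updates when threads interleave
    }

    static ConcurrentCounterDemo simulate(int threads, int requestsPerThread) {
        ConcurrentCounterDemo demo = new ConcurrentCounterDemo();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.submit(() -> {
                for (int i = 0; i < requestsPerThread; i++) {
                    demo.handleRequest();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return demo;
    }

    int atomicHits() { return atomicHits.get(); }
    int plainHits() { return plainHits; }
}
```

`ConcurrentCounterDemo.simulate(8, 10_000)` always ends with `atomicHits()` equal to 80000, while `plainHits()` can come out lower on some runs because concurrent `++` operations overwrite each other.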

Multiple API requests

We can keep one counter variable per API, or a single Map that holds a counter for each API. Here we use one variable per API.

```java
private final AtomicInteger welcomeCounter = new AtomicInteger();
private final AtomicInteger goodByeCounter = new AtomicInteger();

@RequestMapping("/greeting")
public String welcome() {
    welcomeCounter.getAndIncrement();
    return "Welcome to Micrometer Tutorial";
}

@RequestMapping("/tata")
public String goodBye() {
    goodByeCounter.getAndIncrement();
    return "Good Bye from Micrometer Tutorial";
}
```

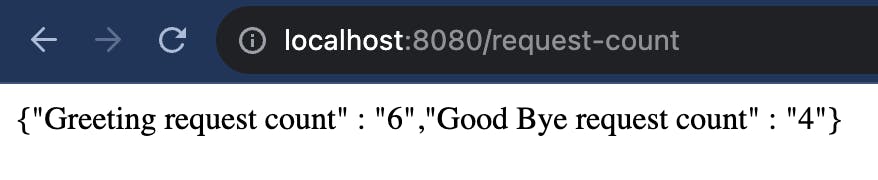

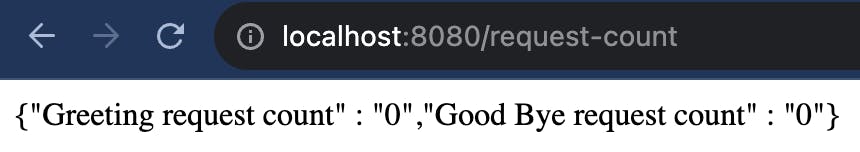

```java
@RequestMapping("/request-count")
public String getHits() {
    return "{\"Greeting request count\" : \"" + welcomeCounter + "\"," +
           "\"Good Bye request count\" : \"" + goodByeCounter + "\"}";
}
```
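The single-Map option mentioned earlier could look like this minimal sketch. It assumes a `ConcurrentHashMap` keyed by an API name such as `"greeting"` or `"tata"`; the class and method names are hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper: one map holds a counter per API name, instead of one field per API.
class ApiRequestCounters {

    private final Map<String, AtomicInteger> counters = new ConcurrentHashMap<>();

    // Creates the counter on first use, then increments it atomically.
    void increment(String api) {
        counters.computeIfAbsent(api, key -> new AtomicInteger()).incrementAndGet();
    }

    // Returns 0 for an API that has never been hit.
    int count(String api) {
        AtomicInteger counter = counters.get(api);
        return counter == null ? 0 : counter.get();
    }

    // Sorted copy of the current values, e.g. to return from /request-count.
    Map<String, Integer> snapshot() {
        Map<String, Integer> copy = new TreeMap<>();
        counters.forEach((api, counter) -> copy.put(api, counter.get()));
        return copy;
    }
}
```

Adding a new API then needs no new field: the handler calls `increment("new_api")`. In a Spring Boot web application, returning `snapshot()` from a `@RestController` method would typically be serialised to JSON by Jackson, which removes the hand-built JSON string.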

API failure case

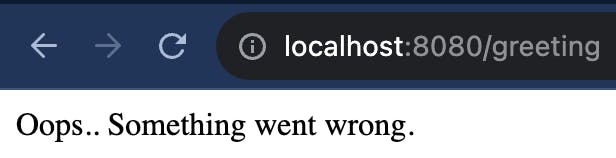

What happens if my code throws an exception?

Let's force an exception. Now failed HTTP requests are not counted at all.

```java
import org.springframework.http.ResponseEntity;

@RequestMapping("/greeting")
public ResponseEntity<String> welcome() {
    try {
        Long.parseLong("adfsdhgk");       // always throws NumberFormatException
        welcomeCounter.getAndIncrement(); // never reached, so failures go uncounted
        return ResponseEntity.ok("Welcome to Micrometer Tutorial");
    } catch (Exception e) {
        return ResponseEntity.status(500).body("Oops.. Something went wrong.");
    }
}
```

We could count the failed requests too, but if we add them to the same overall counter, we can no longer tell successes from failures. There are a few ways to record the failure case:

Append the outcome (success or failure) to the metric name of each API.

Introduce extra fields, commonly called tags.

For now, we choose the first option.

```java
// Separate success and failure counters for the greeting API
private final AtomicInteger welcomeSuccessCounter = new AtomicInteger();
private final AtomicInteger welcomeFailureCounter = new AtomicInteger();

@RequestMapping("/greeting")
public ResponseEntity<String> welcome() {
    try {
        Long.parseLong("adfsdhgk"); // simulated failure: always throws
        welcomeSuccessCounter.getAndIncrement();
        return ResponseEntity.ok("Welcome to Micrometer Tutorial");
    } catch (Exception e) {
        welcomeFailureCounter.getAndIncrement();
        return ResponseEntity.status(500).body("Oops.. Something went wrong.");
    }
}
```

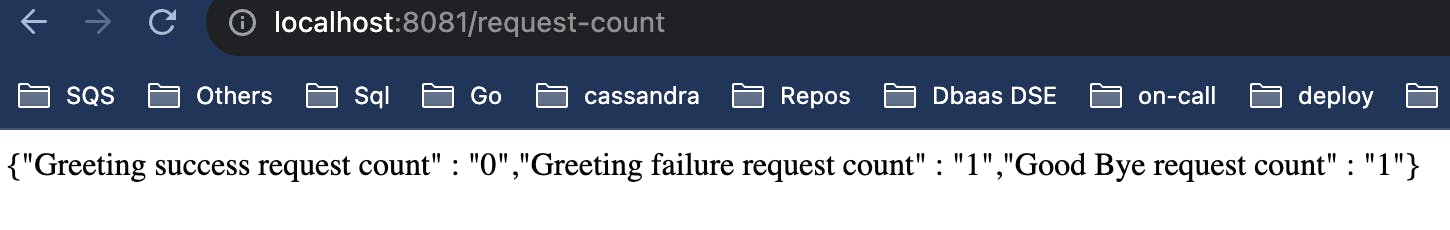

```java
@RequestMapping("/request-count")
public String getHits() {
    return "{\"Greeting success request count\" : \"" + welcomeSuccessCounter + "\"," +
           "\"Greeting failure request count\" : \"" + welcomeFailureCounter + "\"," +
           "\"Good Bye request count\" : \"" + goodByeCounter + "\"}";
}
```

Good work. The failure count is now included as well.
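Repeating the same try/catch counting logic in every handler gets tedious. One possible refactoring, sketched here with a hypothetical `OutcomeCounter` class (not part of Spring or Micrometer), runs the handler logic and records success or failure in one place. It assumes failures surface as unchecked exceptions, as `NumberFormatException` does.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical helper: runs a handler and counts whether it succeeded or threw.
class OutcomeCounter {

    private final AtomicInteger success = new AtomicInteger();
    private final AtomicInteger failure = new AtomicInteger();

    // On success, counts it and returns the handler's result.
    // On a RuntimeException, counts the failure and returns the fallback response instead.
    <T> T record(Supplier<T> handler, Function<RuntimeException, T> onFailure) {
        try {
            T result = handler.get();
            success.incrementAndGet();
            return result;
        } catch (RuntimeException e) {
            failure.incrementAndGet();
            return onFailure.apply(e);
        }
    }

    int successCount() { return success.get(); }
    int failureCount() { return failure.get(); }
}
```

In the controller, the `Long.parseLong` call and the `ResponseEntity.ok(...)` response would go in the first lambda and the `ResponseEntity.status(500)` response in the second, so each handler no longer manages its own counters.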

Track failure reasons

Now suppose we have to track multiple failure scenarios, each with its own response status code and exception. The result would look like this:

```json
{
  "Greeting success request count" : "5",
  "Greeting failure request count with 500 status" : "1",
  "Greeting failure request count with 401 status" : "5",
  "Greeting failure request count with 404 status" : "0",
  "Good Bye success request count" : "2",
  "Good Bye failure request count with 500 status" : "0"
}
```

Nice try. But I would also like to record the failure reason. If we keep appending field values to the metric name, we run into some drawbacks:

The measurement names get long.

We cannot look up a measurement without knowing every field encoded in its name (here, the failure reason).

The format is not generic enough for storing the data and acting on it (dashboards, alerts).

Instead of a name like "Greeting request count failed with status 500 with exception null pointer exception", we can model the metric as a simple object with a fixed metric name, `http_request_count`, plus labels such as `api=greeting`, `output=failure`, `status=500` and `exception=null_pointer_exception`. The data then looks like this:

```json
[
  {
    "name" : "http_request_count",
    "api" : "greeting",
    "output" : "success",
    "status" : "200",
    "exception" : "none",
    "count" : "10"
  },
  {
    "name" : "http_request_count",
    "api" : "greeting",
    "output" : "failure",
    "status" : "500",
    "exception" : "null_pointer_exception",
    "count" : "2"
  },
  {
    "name" : "http_request_count",
    "api" : "greeting",
    "output" : "failure",
    "status" : "400",
    "exception" : "null_pointer_exception",
    "count" : "1"
  },
  {
    "name" : "http_request_count",
    "api" : "good_bye",
    "output" : "success",
    "status" : "200",
    "exception" : "none",
    "count" : "1"
  }
]
```

Now the data fits a clean, uniform model.
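This model maps naturally onto a key made of the metric name plus its tags. The following is a minimal in-memory sketch, assuming Java 16+ for the `record`; `MetricId` and `TaggedCounters` are hypothetical names. It also shows how tags address the drawbacks listed earlier: counting all failures of an API becomes a filter on tags rather than string matching on long metric names.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical identity of one time series: a fixed name plus a set of tags (labels).
record MetricId(String name, Map<String, String> tags) {
    MetricId {
        tags = Map.copyOf(tags); // immutable copy, so the id is safe to use as a map key
    }
}

// Hypothetical store: one counter per distinct (name, tags) combination.
class TaggedCounters {

    private final Map<MetricId, AtomicLong> counters = new ConcurrentHashMap<>();

    void increment(String name, Map<String, String> tags) {
        counters.computeIfAbsent(new MetricId(name, tags), id -> new AtomicLong())
                .incrementAndGet();
    }

    // Sums every series of `name` whose tags contain all entries of `filter`.
    long sum(String name, Map<String, String> filter) {
        return counters.entrySet().stream()
                .filter(e -> e.getKey().name().equals(name))
                .filter(e -> e.getKey().tags().entrySet().containsAll(filter.entrySet()))
                .mapToLong(e -> e.getValue().get())
                .sum();
    }
}
```

For example, after recording one request with tags `api=greeting, output=failure, status=500`, the call `sum("http_request_count", Map.of("output", "failure"))` includes it without the caller knowing its status or exception tags.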

Why these measurements?

This `http_request_count` belongs to the metrics category of observability's three pillars (metrics, logs and traces). We have to process the data to get value out of it. In most cases, we want to visualise this data to monitor the production application and to page the on-call engineers when something goes wrong. For that, the data has to be collected from the application (scraped or pushed) and stored somewhere. Numerous monitoring systems support storing and querying metrics, such as CloudWatch, Datadog, Graphite, InfluxDB, JMX, New Relic, Prometheus and StatsD.

Why micrometer.io?

So it is crucial to have the `http_request_count` metric in a format that our chosen monitoring system supports.

Say I send this metric to StatsD today; it has to be in the StatsD format that an agent such as Telegraf understands. If I switch to Prometheus tomorrow, Prometheus has its own format for measurements.

Every measurement written in a format tied to one backend then has to be rewritten in code. Micrometer provides a vendor-neutral interface for Java applications: we instrument the code once, and a registry for the chosen backend takes care of the format.
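To make the vendor-neutral idea concrete, here is a toy sketch: the application describes `http_request_count` once as a name, tags and a value, and only a pluggable formatter knows the backend's wire format. `MetricFormatter` and both implementations are hypothetical, not Micrometer classes. The Prometheus line follows the text exposition style `name{label="value"} value`; the StatsD line uses the DogStatsD tag extension (`|#tag:value`), since plain StatsD has no tags. Label escaping and other details are omitted.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical vendor-neutral abstraction: application code only depends on this.
interface MetricFormatter {
    String format(String name, Map<String, String> tags, long value);
}

// Prometheus text exposition style: name{tag="value",...} value (no escaping, for brevity).
class PrometheusStyleFormatter implements MetricFormatter {
    public String format(String name, Map<String, String> tags, long value) {
        String labels = new TreeMap<>(tags).entrySet().stream()
                .map(e -> e.getKey() + "=\"" + e.getValue() + "\"")
                .collect(Collectors.joining(","));
        return name + (labels.isEmpty() ? "" : "{" + labels + "}") + " " + value;
    }
}

// StatsD counter line with DogStatsD-style tags: name:value|c|#tag:value,...
// For StatsD the value is the increment to send (e.g. 1 per request), not a running total.
class StatsdStyleFormatter implements MetricFormatter {
    public String format(String name, Map<String, String> tags, long value) {
        String tagList = new TreeMap<>(tags).entrySet().stream()
                .map(e -> e.getKey() + ":" + e.getValue())
                .collect(Collectors.joining(","));
        return name + ":" + value + "|c" + (tagList.isEmpty() ? "" : "|#" + tagList);
    }
}
```

Switching from StatsD to Prometheus then means swapping one formatter instead of rewriting every measurement. Micrometer's `MeterRegistry` implementations play this role in real applications and also handle details this toy ignores, such as StatsD counters sending increments while Prometheus exposes running totals.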

If you are a Spring application developer, you will be interacting directly with Micrometer APIs most of the time.