Observe your software before you scale it out

Encouraging developers to measure features before shipping them

"Hope is not a strategy."

~ A famous quote from Google's SRE (Site Reliability Engineering) book.

Along the same lines, the data we measure, not our guesses or hopes, should tell us which direction to take on any problem. Beyond its technical uses in the software industry, Data-Driven Decision Making (DDDM) has also become a key trend in business decisions in organisational management.

Hold on. Let me get to my point.

"If you can't measure it, you don't ship it to production in a scalable system."

It is as simple as that. I would say "Observability Driven Development" should emerge as a practice in software development.

Yes, you heard me right. Developers will have to share the responsibility for observability in one way or another.

Why should developers care about observability?

For years, observability has belonged to the maintenance phase of the software lifecycle.

We live in an era of change. Especially with software frameworks, adaptability is the key to surviving and growing. The toughest thing in the world is unlearning what you have followed for years; it requires a change of mindset. One such shift in observability is taking ownership of observation during the development phase.

As applications become cloud-native, developers tend to take on various roles such as testing, operations and security. The names in operations are getting fancy: DevOps, DataOps, DevSecOps, MLOps, AIOps. Whichever Ops you do, or even if you only do development, you have to support and maintain the live application in production. This need not be direct customer support or on-call duty, but whoever develops a feature has to be available to help the customer support person or the on-call engineer.

A short story about a new feature

A new developer introduces a new API to query data in an existing system. In the next automated deployment, the feature is pushed to production. The on-call engineer gets paged by a high-error-percentage alert.

Assume the root cause of the errors is that the API does not handle all the characters in the charset supported by the database. The test cases in staging used only alphanumeric characters, so the feature passed testing. In production, however, customer data contains special characters.

But the on-call engineer doesn't know any of this. Seeing that the overall error percentage has increased since the deployment, he reverts the new build and resolves the incident.

That is where the conversation begins.

Developer: How can we not release this feature? We committed the shipping date to the customer.

On-call: What else could I do when this release was affecting the availability of our system?

Developer: I agree the timing correlates. But do you have any evidence that the new API caused the issue?

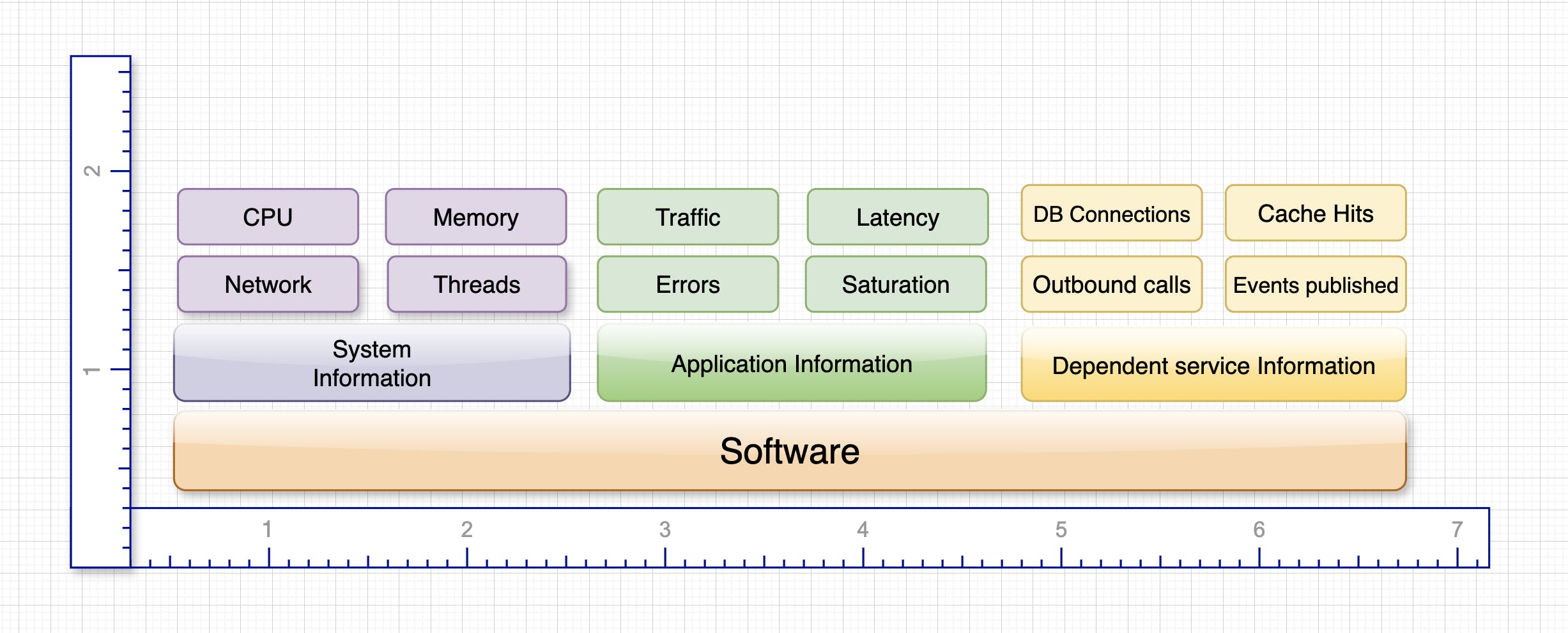

On-call: No. Did you enable metrics for the new API? (That is, do you have evidence that it was working fine?) Traffic and error metrics are available for the existing APIs, and those were fine.

Developer: Oops. Isn't handling metrics the operations team's job?

On-call: The operations team collects and stores the metrics, but developers have to enable them in the first place. So it is a shared responsibility. Please follow this guidelines page for any new API.

The guidelines page might say to add the @Timed annotation in Java, to add the @histogram.time() decorator in Python, or to add the new API URI to a list of constants that needs to be whitelisted. How you do it doesn't matter. What matters is "taking responsibility for measuring every feature you develop."
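To make the idea concrete, here is a minimal sketch in Python using only the standard library, assuming a plain in-memory store instead of a real metrics library. The decorator is similar in spirit to the annotations mentioned above: it counts calls, counts failures and records latency for one endpoint. `METRICS`, `timed`, `search_orders` and the endpoint name are hypothetical, not part of any library.

```python
import time
from collections import defaultdict
from functools import wraps

# Hypothetical in-memory metric store, keyed by endpoint name. In a real
# service a metrics library would hold these values and the operations
# team's monitoring system would collect and store them.
METRICS = defaultdict(lambda: {"count": 0, "errors": 0, "latencies": []})


def timed(endpoint):
    """Hypothetical decorator: count calls and failures, record latency."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            stats = METRICS[endpoint]
            stats["count"] += 1
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                stats["errors"] += 1
                raise  # still propagate the failure to the caller
            finally:
                # Runs on success and on failure, so every call is timed.
                stats["latencies"].append(time.perf_counter() - start)
        return wrapper
    return decorator


@timed("GET /orders/search")  # hypothetical new API from the story
def search_orders(query):
    # Simulates the bug from the story: only alphanumeric input works.
    if not query.isalnum():
        raise ValueError(f"unsupported characters in {query!r}")
    return [f"order matching {query}"]


search_orders("abc123")          # the kind of input used in staging
try:
    search_orders("a&b")         # the kind of input seen in production
except ValueError:
    pass

stats = METRICS["GET /orders/search"]
print(stats["count"], stats["errors"])  # prints "2 1"
```

Even in this toy version, the error counter would have pointed the on-call engineer at the new endpoint instead of leaving deployment timing as the only evidence.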

This is vital for any scalable system. Developers should start thinking along these lines to embrace "Observability Driven Development".

How is my new API going to perform?

How many requests does my API serve?

How many of them fail with internal errors?

How many of them are rejected due to client errors?

Is it within the SLA?
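The API questions above can be answered from a handful of recorded values. The sketch below is hypothetical: it assumes each request is recorded with its HTTP status code and latency, treats 5xx responses as internal errors and 4xx responses as client errors, and assumes the SLA is expressed as a p99 latency bound (many SLAs also include an availability or error-rate target). `Request`, `api_report` and the sample data are illustrative names and values only.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    status: int         # HTTP status code returned by the API
    latency_ms: float   # time taken to serve the request


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def api_report(requests, sla_p99_ms):
    """Summarise traffic, errors and SLA compliance for one API."""
    if not requests:
        raise ValueError("no requests recorded")
    total = len(requests)
    internal_errors = sum(1 for r in requests if 500 <= r.status <= 599)
    client_errors = sum(1 for r in requests if 400 <= r.status <= 499)
    p99 = percentile([r.latency_ms for r in requests], 99)
    return {
        "requests": total,                               # how many served
        "internal_error_rate": internal_errors / total,  # failed internally
        "client_error_rate": client_errors / total,      # rejected (4xx)
        "p99_ms": p99,
        "within_sla": p99 <= sla_p99_ms,                 # assumed p99 SLA
    }


# Hypothetical sample: 90 successes, 5 internal errors, 5 client errors.
sample = (
    [Request(200, 50.0)] * 90
    + [Request(500, 80.0)] * 5
    + [Request(400, 20.0)] * 5
)
report = api_report(sample, sla_p99_ms=100.0)
print(report)
```

Keeping internal and client errors apart matters: a spike in 4xx responses usually means callers are sending bad input, while a spike in 5xx responses points at the service itself.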

How is my new event type working in a message-driven application?

How many events get published?

Are all events received on the consumer side?

How will my consumer perform with this new message type? And so on.
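For the message-driven questions, a similar sketch compares how many events of each type were published with how many the consumer handled. `EventMetrics` and the `OrderCreated` event type are hypothetical; in a real system these counters would live in a metrics library on both the producer and the consumer side.

```python
from collections import Counter


class EventMetrics:
    """Hypothetical per-event-type counters for a message-driven app."""

    def __init__(self):
        self.published = Counter()  # incremented by the producer
        self.consumed = Counter()   # events the consumer handled successfully
        self.failed = Counter()     # events the consumer received but failed on

    def on_publish(self, event_type):
        self.published[event_type] += 1

    def on_consume(self, event_type, ok=True):
        if ok:
            self.consumed[event_type] += 1
        else:
            self.failed[event_type] += 1

    def missing(self):
        """Events published but never seen by the consumer, per type."""
        gaps = {}
        for event_type, count in self.published.items():
            # Counter returns 0 for unseen keys without inserting them.
            seen = self.consumed[event_type] + self.failed[event_type]
            if count > seen:
                gaps[event_type] = count - seen
        return gaps


metrics = EventMetrics()
for _ in range(3):
    metrics.on_publish("OrderCreated")
metrics.on_consume("OrderCreated")
metrics.on_consume("OrderCreated", ok=False)
print(metrics.missing())  # prints "{'OrderCreated': 1}"
```

A non-zero gap does not always mean lost events: messages may still be in flight, so compare the counts over a window long enough for delivery to complete. Consumer performance can be tracked by also recording how long each handler call takes.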

Thanks for reading.